Transferability in Qualitative Research

Published by at September 21st, 2021 , Revised On October 10, 2023

What is Transferability?

Generally speaking, the term ‘transferability’ is used for something transferable or exchangeable. In research, however, the term is used for a very specific concept. That concept is better known as reliability. So, if it’s popularly known as reliability, why call it transferability?

Lincoln and Guba (1985) suggested a couple of terms that are better suited than reliability is to represent what it represents (as will be discussed below). One of those terms is transferability.

It will be assumed in the later paragraphs, therefore, that while reliability is being discussed, it’s also a discussion of transferability and vice versa. They are both the same thing.

Did you know: Reliability in research goes by many other names too, such as ‘credibility’, ‘neutrality’, ‘confirmability’, ‘dependability’, ‘consistency’, ‘applicability’, and, in particular, ‘dependability’.

Reliability in Research – Definition

Reliability is an important principle in two main stages of research, data collection and findings. The term is generally applied to data collection tools i.e., whether they are reliable or not.

What this means is that whether a tool—suppose a questionnaire—will yield the same results if applied under different settings, circumstances, to a different sample, etc.

Reliability is, therefore, a measure of a test’s or tool’s consistency.

That is the case for what it means to have a reliable tool or instrument. But what about reliable results, or reliable scores?

Reliable findings imply that no matter which instrument or tool is used to measure a certain thing, the results that the tool will yield will remain the same. Again, this is only possible when the measuring tool is reliable, to begin with.

In yet simpler terms, reliability refers to reaching to same conclusions consistently or repeatedly.

Key takeaway: It’s safe to assume, therefore, that reliable findings/scores and reliable measuring tools are two sides of the same coin.

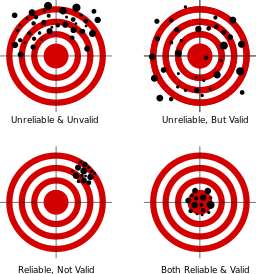

The following figure very effectively demonstrates what reliability is (about validity, as will be discussed later):

Reliability in Qualitative Research

When it comes to qualitative research, things like tests are commonly used. They can be in the form of pre-and post-tests that are administered on a sample, to observe the effects of something before and after exposure. Similarly, other qualitative tools like plotting graphs for the gathered data on test scores might be used as well.

Whichever qualitative method or tool is used, it has to be reliable.

Reliable Tests

Mostly, in research, tests are conducted in the form of questionnaires, surveys, etc. to gather information in the data collection stage. Those tests must remain reliable. In other words, if the same test is given to the same student or matches students on two different occasions, the test should yield similar results.

Test reliability means a test yields the same scores no matter how many times it’s administered, even if the test format is changed. Performance in a test might be affected by factors besides the abilities the test aims to measure (further discussed below).

Important to remember: Examination of reliability helps researchers tell apart the factors contributing most to a certain score, from the ones that have little to no effect.

Importance of Reliability in Qualitative Research

Researchers must obtain consistent results throughout the research process, no matter the type of instrument being used for data collection. However, reliability is more important than merely providing consistent results or scores throughout the process. It’s also important because of two other reasons:

1. First of all, reliability provides a measure of the extent to which a test-takers (any participant from the research sample, for instance) score reflects random/systematic measurement error. Observed Score = True Score + Error

Where,

Random error is an error that occurs due to random reasons and affects the results. Random factors include environmental factors, weather conditions, personal and/or psychological problems experienced by the researcher, and so on. Such errors affect results differently.

Systematic error is an error in a system. It affects the results in the same way. Such errors mostly include a fault in a measuring instrument; an incorrect marking scheme, etc.

The observed score is the total score that a test taker, for instance, gains on a test.

A true score is a score that is obtained, based on one’s knowledge.

Error: is the random or systematic error.

As the above formula shows, it is only when an error is accounted for that a researcher can tell what the actual, true score is. Once the error has been removed, the testing measure or tool can be deemed reliable or not. If, for instance, even after removal of the error, the observed scores are not obtained consistently, it means the test/tool is not reliable.

Key point: It’s easy to guess what ‘scores’ mean when it comes to quantitative research. They can be scores obtained from tests or some other statistical procedure. But what about scores in qualitative research? Even in qualitative research, quantitative methods might still be used, for instance, in the sampling and/or data interpretation stages.

2. The second reason to be concerned with reliability is that it is a precursor to test validity. That is, if scores can’t be assigned consistently, it is impossible to conclude that the scores accurately measure the domain they’re supposed to measure (validity).

Here, validity refers to the extent to which the inferences made from a test are justified and accurate. In other words, if a certain test/tool measures what it’s supposed to measure, it’s said to be valid. However, formally assessing the validity of specific use of a test/tool can be a difficult and time-consuming process.

Therefore, reliability analysis is often viewed as the initial step in the validation process. If the test/tool is unreliable, research won’t need to spend the time investigating whether it is valid; it won’t be. Contrarily, if the test has adequate reliability, a validation study would be worthwhile.

The key point to remember: Validity may be a sufficient but not necessary condition for reliability. However, reliability is an important condition for validity.

3. Quantitative research assumes the possibility of replication; if the same methods are used with the same sample, then the results should be the same. Typically, quantitative methods require a degree of control. This distorts the natural occurrence of phenomena.

Indeed, the premises of natural sciences include the uniqueness of situations, such that the study cannot be replicated – that is their strength rather than their weakness. However, this is not to say that qualitative research need not strive for replication in generating, refining, comparing, and validating constructs.

Therefore, just as replication and generalization of results are important in quantitative research, it might be just as important in certain types of qualitative researches, too. And hence, reliability is important in these kinds of studies also.

Types of Reliability in Qualitative Research

Now that the definition and importance of reliability have been established, it’s time to look at the multiple types of reliability that exist in research.

- Test takers’/participants’/respondents’ reliability

The test taker can be an individual from the sample, a student on whom the test is administered, a respondent of a questionnaire, or a participant of a survey. Naturally, all such individuals are human. They are therefore prone to experience stress, fatigue, anxiety, and other psychological and/or physiological factors. These factors might interfere and directly influence how these people perform on a test, survey, questionnaire, etc.

Also included in this category are such factors as a test-taker’s “test-wiseness” or strategies for efficient test-taking (Mousavi, 2002, p. 804).

- Rater reliability

This is further divided into two types:

- Inter-rater reliability: This implies whether two or more two raters, who are marking a test, questionnaire, etc., are reliable or not. It’s a measure of consistency between different raters’ ratings. For instance, if two or more than two of the raters/researchers come to the same score for a certain train in a psychological test, inter-rater reliability withholds.

It’s reliability within different raters.

- Intra-rater reliability: This implies whether or not the rater—the person who rates or ‘grades’ a certain test, summarises the scores of a questionnaire, etc.—is reliable or not. Are they under stress? Did they start rating after something bad happened to them? Are they qualified enough? Such factors can affect how a test or measuring tool is marked and in turn, may affect the results.

It’s reliability within the same rater.

- Item reliability: This is a measure of reliability used to evaluate the degree to which different test items that test the same construct produce similar results. This is because the test items may not be reliable. The items, for instance, maybe too easy or too difficult. Furthermore, they may not discriminate sufficiently between different members of the same sample of a population.

- Internal consistency reliability: As the name implies, this form of reliability is related to the format of a test, questionnaire, survey, etc. If different parts of a single test instrument led to different conclusions about the same thing, the test instrument’s internal consistency is low. This means the extent to which test questions measure the same skills or traits and the extent to which they’re related to one another is low.

- Test administrator reliability: There is reliability related to the test taker, test rater as well as test administration. Was the administration poorly managed in which the questionnaire or survey was conducted? Was there a lack of time to answer all the questions in the questionnaire? Did the questionnaires fall short of the total number of participants? Was there a problem with the photocopying of the same questionnaire?

All such factors directly influence test administrator reliability. If they’re mismanaged, this reliability will be reduced and vice versa.

- Test reliability: The rest itself can have some kind of error in it that can lower its reliability. For instance, in some researches, if the questionnaires given to the sample are too long, respondents might grow weary and not respond properly near the end, or not all at. Similarly, if the test is too complicated, respondents might ask others for help and copy off their answers to ignore the hassle of first understanding the question then comes up with an answer. Such factors lower a test’s reliability. This form of reliability is often overlooked in research, too.

How is Reliability Measured?

There are different methods of ensuring that the test, questionnaire, survey, or whichever other data collection tool is used, is very reliable. The methods depend on a couple of things, mainly the type of research, its objectives, sample type and so on.

However, the most common methods to determine and measure the reliability of a test or measuring tool are listed below. It should be noted that these are the general, most commonly used methods. There are variations to these methods themselves.

1. Test-retest reliability: This is a measure of reliability obtained by administering the same test twice over some time to a group of individuals. The scores obtained in the two tests can then be correlated to evaluate the test for stability over time.

This method is used to assess the consistency of a measuring instrument or test from one time to another; to determine if the score generalizes across time. The same test form is given twice and the scores are correlated to yield a coefficient of stability. High test-retest reliability tells us that examinees would probably get similar scores if tested at different times. The interval between test administrations is important—practice effects/learning effects.

2. Parallel or alternate form reliability: This is a measure of reliability obtained when research creates two forms of the same test by varying the items slightly. Reliability is stated as a correlation between scores of tests 1 and 2.

This method is used to assess the consistency of the results of two tests constructed in the same way from the same content domain; to determine whether scores will generalize across different sets of items or tasks. The two forms of the test are correlated to yield a coefficient of equivalence.

3. Internal consistency reliability: This method involves ensuring that the different parts within the same test or instrument measure the same thing, consistently. For example, a psychological test having two sections should be consistent in what it claims to measure, for instance, personal well-being. The first section could identify it via some questions, while the second section could include narrating a personal account.

4. Rater Reliability

- Inter-rater reliability: The reliability could be improved by making sure that there are no personal differences between the different rates, for instance, or that they are on the same page about their tasks and responsibilities.

- Intra-rater reliability: This reliability can be improved by ensuring, for instance, that the rater is not forced to rate a certain test after he/she went through something traumatic or is ill.

5. Item reliability: This reliability type will increase if the items or questions in a questionnaire, for instance, are different between different respondents; are easily understood by the respondents; aren’t too lengthy and so on.

Other Strategies to Improve Reliability of a Research Tool

To ensure their data collection test, tool or instrument is reliable, a research can:

- Objectively score tests by creating:

- Better questions

- Lengthening the test (but not too much)

- Manage item difficulty

- Manage item reliability

- Subjectively score tests by:

- Training the scorers/themselves

- Using a reasonable rating scale or techniques etc

- Both objectively and subjectively minimizing the bias by avoiding/promoting:

- To SEE the respondent in their image.

- To seek answers that support preconceived notions.

- Misperceptions (on the part of the interviewer) of what the respondent is saying.

- Misunderstandings (on the part of the respondent) of what is being asked.

Criteria for Reliability in Qualitative Research

In qualitative methodologies, reliability includes fidelity (faithfulness) to real life, context- and situation-specificity, authenticity, comprehensiveness, detail, honesty, depth of response, and meaningfulness to the respondents.

Denzin and Lincoln (1994) suggest that reliability as replicability in qualitative research can be addressed in several ways:

- Stability of observations: whether the researcher would have made the same observations and interpretations if observations had been done at a different time or in a different place.

- Inter-rater reliability: whether another observer with the same theoretical framework and observing the same phenomena would have interpreted them in the same way.

Conclusion

Reliability refers to the extent to which scores obtained on a specific form of a test, measuring tool or instrument can be generalized to scores obtained on other forms of the test or tool, administered at other times, or possibly scored by some other rater(s).

It’s a very important concept—for multiple reasons—to be considered during the data collection and interpretation stages of research. It’s also called transferability (of results) in qualitative research/

There are various types of reliability in research, each with its own dynamics. Certain criteria have to be met for a test or instrument to be reliable. There are many methods and strategies researchers can use to improve both the overall reliability as well as a specific type of reliability of their data collection tool.

Frequently Asked Questions

Transferability refers to the ability of knowledge, skills, or concepts learned in one context or situation to be applied effectively in a different context or situation, showcasing adaptability and practical utility beyond their original setting.